Originally Published 11/12/2014

So we had a customer that had a slow application but wasn’t sure what queries were causing them problems. We provide access to our customers to see a dashboard that allows them to drill down into waits to find slow responding queries, but the interface was too much for them to digest. Other database platforms that I support allow you to log queries running longer than a given amount of time to a log so that they can be analyzed. I thought it would be helpful to provide the same functionality to these customers to help them identify their problem areas. I’ve been looking at an excuse to play with Extended Events and thought this would be a good opportunity to help out my customers and learn something new.

The plan was was to use Extended Events to log queries taking more than 500 milliseconds to a file. I could have used other targets, but I am thinking of possibly throwing this data into Hadoop later. (If I do, I’ll be sure to blog about that as well.) Setting up the Extended Event Session was not that difficult. I based it off of Paul Randal’s blog post on logging queries that were expensive based on CPU. After modifying it to filter based on duration and not CPU as well as removing some noise from the results this is the script I used to set up and start the Extended Event Session.

USE MASTER;

GO

/* Conditionally drop the session if it already exists */

IF EXISTS (SELECT * FROM sys.server_event_sessions WHERE name = 'EE_SlowQueryLog')

DROP EVENT SESSION EE_SlowQueryLog ON SERVER;

GO

/* Create the session */

CREATE EVENT SESSION EE_SlowQueryLog ON SERVER

ADD EVENT sqlserver.sql_statement_completed

( ACTION (

sqlserver.client_app_name

,sqlserver.client_hostname

,sqlserver.database_id

,sqlserver.database_name

,sqlserver.plan_handle

,sqlserver.sql_text

,sqlserver.username)

WHERE duration > 500000 /* 500 milliseconds in microseconds */

AND sql_text NOT LIKE 'WAITFOR (RECEIVE message_body FROM WMIEventProviderNotificationQueue)%' /* Exclude WMI waits */

AND sql_text NOT LIKE '%sp_GetSlowQueries%' /* Exclude traffic from stored procedure to read data */)

ADD TARGET package0.asynchronous_file_target

( SET FILENAME = N'C:\ModernDBA\EE_SlowQueryLog.xel', METADATAFILE = N'C:\ModernDBA\EE_SlowQueryLog.xem')

WITH (max_dispatch_latency = 1 seconds);

GO

/* Start Session */

ALTER EVENT SESSION EE_SlowQueryLog ON SERVER STATE = START;

GO

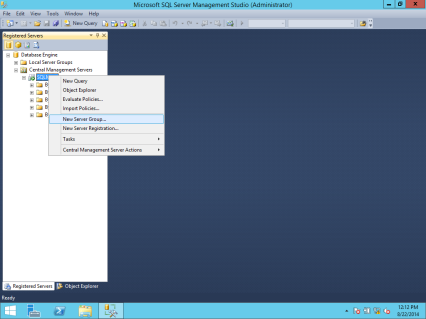

This script sets up a collection and stores the queries in a file on the operating system. But instead of having to grant everyone access to the file to see the contents I created a stored procedure that read the contents of the file and formatted them into something more useful. I started with the select in Paul Randal’s blog post but in order to run the sys.fn_xe_file_target_read_file function you need to have VIEW SERVER STATE permissions on the server. I wasn’t excited about granting everyone access to this, so I decided to sign the procedure with a cert to allow permissions to everyone. This process was modeled after the SQL Server Separation of Duties Framework.

The process involves making sure that master keys exist in both the master and ModernDBA databases. Then setting up a certificate that matches in both databases. Then you can associate the certificate with a login that has the VIEW SERVER STATE permission and use the certificate to sign the procedure in the ModernDBA database. This will allow the procedure to run as the login and get to the slow query log files. Before you run the script below you will want to change the password to something a bit more secure.

/* THIS QUERY NEEDS TO BE RUN IN SQLCMD MODE */

/* To enable SQLCMD mode in SSMS use the Query Menu and select SQLCMD */

:SETVAR Password "Password1!"

USE MASTER;

GO

/* Conditionally create the ModernDBA database */

IF NOT EXISTS(

SELECT * FROM sys.databases WHERE name = 'ModernDBA'

)

CREATE DATABASE ModernDBA;

GO

/* Create a Database Master Key (DMK) in the master database */

IF NOT EXISTS (SELECT * FROM sys.symmetric_keys WHERE name LIKE '%databasemasterkey%')

BEGIN

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '$(Password)';

END

GO

/* Create a certificate on the server. */

IF NOT EXISTS(SELECT * FROM sys.certificates WHERE name = 'cert_SlowQueryLog')

CREATE CERTIFICATE cert_SlowQueryLog WITH SUBJECT = 'Sign Slow Query Log Procedure';

GO

/* Backup Certificate to file */

BACKUP CERTIFICATE cert_SlowQueryLog TO FILE = 'C:\ModernDBA\cert_SlowQueryLog.cer'

WITH PRIVATE KEY ( FILE = 'C:\ModernDBA\cert_SlowQueryLog.pvk' ,

ENCRYPTION BY PASSWORD = '$(Password)' );

GO

/* Create a login and map it to the certificate. */

IF NOT EXISTS(SELECT * FROM sys.server_principals WHERE name = 'login_SlowQueryLog')

CREATE LOGIN login_SlowQueryLog FROM CERTIFICATE cert_SlowQueryLog;

GO

/* Revoke the ability for this user to connect */

REVOKE CONNECT SQL FROM login_SlowQueryLog;

GO

/* Grant VIEW Server State to User */

GRANT VIEW SERVER STATE TO login_SlowQueryLog

GO

USE ModernDBA;

GO

--Create the DMK in the user database

--Import the certificate

IF NOT EXISTS (SELECT * FROM sys.symmetric_keys WHERE name LIKE '%databasemasterkey%')

BEGIN

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '$(Password)';

END

GO

IF NOT EXISTS (SELECT * FROM sys.certificates WHERE name = 'cert_SlowQueryLog')

BEGIN

CREATE CERTIFICATE cert_SlowQueryLog FROM FILE = 'C:\ModernDBA\cert_SlowQueryLog.cer'

WITH PRIVATE KEY

(FILE = 'C:\ModernDBA\cert_SlowQueryLog.pvk',

DECRYPTION BY PASSWORD= '$(Password)')

END

GO

/* Create Stored Procedure */

IF EXISTS (SELECT * FROM INFORMATION_SCHEMA.ROUTINES WHERE ROUTINE_NAME = 'sp_GetSlowQueries')

DROP PROCEDURE dbo.sp_GetSlowQueries

GO

CREATE PROCEDURE dbo.sp_GetSlowQueries

WITH ENCRYPTION

AS

BEGIN

SET NOCOUNT ON

SELECT

data.value ('(/event[@name=''sql_statement_completed'']/@timestamp)[1]', 'DATETIME') AS [Time]

, CONVERT (FLOAT, data.value ('(/event/data[@name=''duration'']/value)[1]', 'BIGINT')) / 1000000 AS [Duration (s)]

, CONVERT (FLOAT, data.value ('(/event/data[@name=''cpu_time'']/value)[1]', 'BIGINT')) / 1000000 AS [CPU Time (s)]

, data.value ('(/event/data[@name=''physical_reads'']/value)[1]', 'INT') AS [Physical Reads (# page Reads)]

, data.value ('(/event/data[@name=''logical_reads'']/value)[1]', 'INT') AS [Logical Reads (# page Reads)]

, data.value ('(/event/data[@name=''writes'']/value)[1]', 'INT') AS [Writes (# Page writes)]

, data.value ('(/event/action[@name=''username'']/value)[1]', 'VARCHAR(MAX)') AS [User]

, data.value ('(/event/action[@name=''database_name'']/value)[1]', 'VARCHAR(MAX)') AS [Database]

, data.value ('(/event/action[@name=''sql_text'']/value)[1]', 'VARCHAR(MAX)') AS [SQL Statement]

, SUBSTRING (data.value ('(/event/action[@name=''plan_handle'']/value)[1]', 'VARCHAR(100)'), 15, 50) AS [Plan Handle]

FROM

(SELECT CONVERT (XML, event_data) AS data FROM sys.fn_xe_file_target_read_file

('C:\ModernDBA\EE_SlowQueryLog*.xel', 'C:\ModernDBA\EE_SlowQueryLog*.xem', NULL, NULL)

) entries

ORDER BY [Time] DESC;

END

GO

/* Add Certificate to procedure */

ADD SIGNATURE TO dbo.sp_GetSlowQueries

BY CERTIFICATE cert_SlowQueryLog

GO

/* Grant Execute to everyone */

GRANT EXECUTE ON dbo.sp_GetSlowQueries TO [public];

Now that the query is created all we have to do is grant any users we want to be able to see the slow query log access to the public role on the ModernDBA database. To do that we can use this script.

/* THIS QUERY NEEDS TO BE RUN IN SQLCMD MODE */

/* To enable SQLCMD mode in SSMS use the Query Menu and select SQLCMD */

:SETVAR Login "testme"

USE [ModernDBA]

GO

CREATE USER [$(Login)] FOR LOGIN [$(Login)]

GO

Now that we have granted the user access to the stored procedure they can perform filter selects against the data using common TSQL techniques. One such method is to load the data into a temp table as shown below. While this query returns all the data you could easily modify the SELECT statement to only return the rows of interest.

CREATE TABLE #SlowQueries (

[time] DATETIME

, [duration] FLOAT

, [cpu] FLOAT

, [physical_reads] BIGINT

, [logical_reads] BIGINT

, [writes] BIGINT

, [user] NVARCHAR(128)

, [database] NVARCHAR(128)

, [text] VARCHAR(MAX)

, [plan_handle] VARCHAR(100))

INSERT #SlowQueries ([time], [duration], [cpu], [physical_reads], [logical_reads], [writes], [user], [database], [text], [plan_handle])

EXEC sp_GetSlowQueries;

SELECT *

FROM #SlowQueries;

DROP TABLE #SlowQueries;

This process will store 5 1GB files worth of slow queries that can be looked at by the customer. Performance of reading the files in is a bit on the slow side once you have some data in them. That is why I have excluded the stored procedure from the Extended Event Session. Longer term, I may load this data into a table and grant access to the table. But for now this helped my customers identify queries that needed tuning and was relatively easy to set up.

How have you set up access for your customers to see slow performing queries in SQL Server?

You must be logged in to post a comment.